Supervision

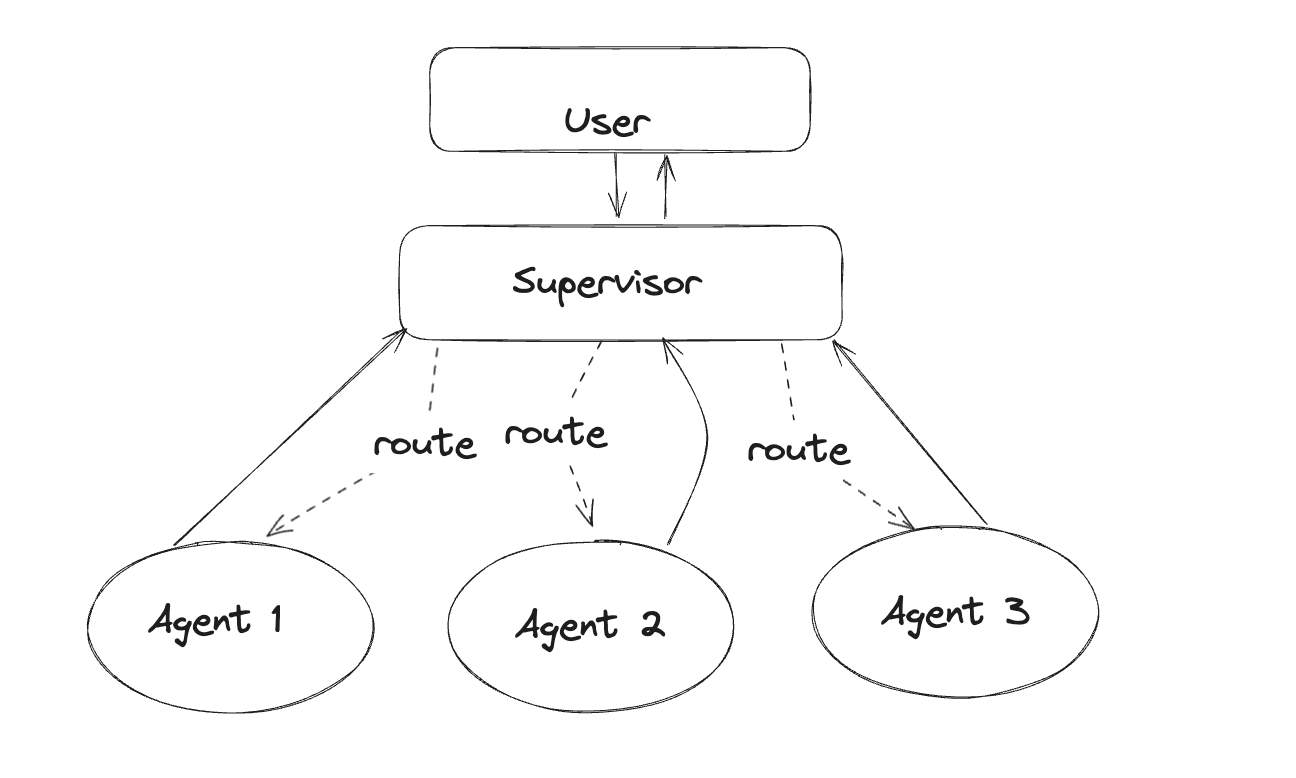

Agent Supervisor

The previous example routed messages automatically based on the output of the initial researcher agent.

We can also choose to use an LLM to orchestrate the different agents.

Below, we will create an agent group, with an agent supervisor to help delegate tasks.

To simplify the code in each agent node, we will use the AgentExecutor class from LangChain. This and other "advanced agent" notebooks are designed to show how you can implement certain design patterns in LangGraph. If the pattern suits your needs, we recommend combining it with some of the other fundamental patterns described elsewhere in the docs for best performance.

Before we build, let's configure our environment:

# %%capture --no-stderr

# %pip install -U langchain langchain_openai langchain_experimental langsmith pandas

import getpass

import os

def _set_if_undefined(var: str):
    # Prompt for the variable only when it is not already set
    if not os.environ.get(var):
        os.environ[var] = getpass.getpass(f"Please provide your {var}")

_set_if_undefined("OPENAI_API_KEY")

_set_if_undefined("LANGCHAIN_API_KEY")

_set_if_undefined("TAVILY_API_KEY")

# Optional, add tracing in LangSmith

os.environ["LANGCHAIN_TRACING_V2"] = "true"

os.environ["LANGCHAIN_PROJECT"] = "Multi-agent Collaboration"

Create tools

For this example, you will make an agent to do web research with a search engine, and one agent to create plots. Define the tools they'll use below:

from typing import Annotated, List, Tuple, Union

from langchain_community.tools.tavily_search import TavilySearchResults

from langchain_core.tools import tool

from langchain_experimental.tools import PythonREPLTool

tavily_tool = TavilySearchResults(max_results=5)

# This executes code locally, which can be unsafe

python_repl_tool = PythonREPLTool()

Helper Utilities

Define a helper function below, which makes it easier to add new agent worker nodes.

from langchain.agents import AgentExecutor, create_openai_tools_agent

from langchain_core.messages import BaseMessage, HumanMessage

from langchain_core.prompts import ChatPromptTemplate, MessagesPlaceholder

from langchain_openai import ChatOpenAI

def create_agent(llm: ChatOpenAI, tools: list, system_prompt: str):
    # Each worker node will be given a name and some tools.
    prompt = ChatPromptTemplate.from_messages(
        [
            (
                "system",
                system_prompt,
            ),
            MessagesPlaceholder(variable_name="messages"),
            MessagesPlaceholder(variable_name="agent_scratchpad"),
        ]
    )
    agent = create_openai_tools_agent(llm, tools, prompt)
    executor = AgentExecutor(agent=agent, tools=tools)
    return executor

We can also define a function to serve as the nodes in the graph. It converts the agent's response into a human message, which is how the response gets added to the global state of the graph.

def agent_node(state, agent, name):
    result = agent.invoke(state)
    return {"messages": [HumanMessage(content=result["output"], name=name)]}
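To make the node contract concrete, here is a minimal sketch that runs the same `agent_node` shape against a stub. `StubHumanMessage` and `EchoAgent` are hypothetical stand-ins introduced only for illustration (they replace `HumanMessage` and the `AgentExecutor` so the snippet runs without any API keys); the real classes carry more fields and behavior.

```python
import functools
from dataclasses import dataclass
from typing import Any, Dict, Optional


@dataclass
class StubHumanMessage:
    """Hypothetical stand-in for langchain_core.messages.HumanMessage."""

    content: str
    name: Optional[str] = None


class EchoAgent:
    """Hypothetical agent that mimics the executor's dict-in, dict-out invoke()."""

    def invoke(self, state: Dict[str, Any]) -> Dict[str, Any]:
        last = state["messages"][-1].content
        return {"output": f"echo: {last}"}


def agent_node(state, agent, name):
    # Same shape as the tutorial's agent_node, but using the stub message class.
    result = agent.invoke(state)
    return {"messages": [StubHumanMessage(content=result["output"], name=name)]}


# Bind the agent and its name up front so the node only takes the graph state.
echo_node = functools.partial(agent_node, agent=EchoAgent(), name="Echo")

update = echo_node({"messages": [StubHumanMessage(content="hi")]})
print(update["messages"][0])
```

The node returns only a partial update (a one-element `messages` list tagged with the worker's name), not the full state.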

Create Agent Supervisor

The supervisor uses function calling to choose the next worker node or to finish processing.

from langchain.output_parsers.openai_functions import JsonOutputFunctionsParser

from langchain_core.prompts import ChatPromptTemplate, MessagesPlaceholder

members = ["Researcher", "Coder"]

system_prompt = (
    "You are a supervisor tasked with managing a conversation between the"
    " following workers: {members}. Given the following user request,"
    " respond with the worker to act next. Each worker will perform a"
    " task and respond with their results and status. When finished,"
    " respond with FINISH."
)

# Our team supervisor is an LLM node. It just picks the next agent to process

# and decides when the work is completed

options = ["FINISH"] + members

# Using openai function calling can make output parsing easier for us

function_def = {
    "name": "route",
    "description": "Select the next role.",
    "parameters": {
        "title": "routeSchema",
        "type": "object",
        "properties": {
            "next": {
                "title": "Next",
                "anyOf": [
                    {"enum": options},
                ],
            }
        },
        "required": ["next"],
    },
}

prompt = ChatPromptTemplate.from_messages(
    [
        ("system", system_prompt),
        MessagesPlaceholder(variable_name="messages"),
        (
            "system",
            "Given the conversation above, who should act next?"
            " Or should we FINISH? Select one of: {options}",
        ),
    ]
).partial(options=str(options), members=", ".join(members))

llm = ChatOpenAI(model="gpt-4-1106-preview")

supervisor_chain = (
    prompt
    | llm.bind_functions(functions=[function_def], function_call="route")
    | JsonOutputFunctionsParser()
)
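The supervisor chain ends with a dictionary such as `{'next': 'Coder'}`, parsed from the JSON arguments of the `route` function call. The sketch below uses only the standard library to show the two strings that `.partial(...)` substitutes into the prompt, and one way the routing value could be checked against the allowed options. `parse_route` is a hypothetical helper and not part of LangChain; the assumption here is that a model may occasionally return a value outside the enum, so an explicit check can be useful.

```python
import json
from typing import List

members = ["Researcher", "Coder"]
options = ["FINISH"] + members


def parse_route(arguments_json: str, allowed: List[str]) -> str:
    """Parse function-call arguments and check 'next' against the allowed values."""
    payload = json.loads(arguments_json)
    choice = payload.get("next")
    if choice not in allowed:
        raise ValueError(f"Unexpected route {choice!r}; expected one of {allowed}")
    return choice


# The two values substituted into the prompt template by .partial(...)
rendered_options = str(options)
rendered_members = ", ".join(members)

print(rendered_options)
print(rendered_members)
print(parse_route('{"next": "Coder"}', options))
```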

Construct Graph

We're ready to start building the graph. Below, define the state and worker nodes using the function we just defined.

import operator

from typing import Annotated, Any, Dict, List, Optional, Sequence, TypedDict

import functools

from langchain_core.prompts import ChatPromptTemplate, MessagesPlaceholder

from langgraph.graph import StateGraph, END

# The agent state is the input to each node in the graph

class AgentState(TypedDict):
    # The annotation tells the graph that new messages will always
    # be appended to the current state
    messages: Annotated[Sequence[BaseMessage], operator.add]
    # The 'next' field indicates where to route to next
    next: str

research_agent = create_agent(llm, [tavily_tool], "You are a web researcher.")

research_node = functools.partial(agent_node, agent=research_agent, name="Researcher")

# NOTE: THIS PERFORMS ARBITRARY CODE EXECUTION. PROCEED WITH CAUTION

code_agent = create_agent(
    llm,
    [python_repl_tool],
    "You may generate safe python code to analyze data and generate charts using matplotlib.",
)

code_node = functools.partial(agent_node, agent=code_agent, name="Coder")

workflow = StateGraph(AgentState)

workflow.add_node("Researcher", research_node)

workflow.add_node("Coder", code_node)

workflow.add_node("supervisor", supervisor_chain)
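The `operator.add` annotation on `messages` is what lets each node return only its new messages. The following is a rough standard-library simulation of that merge rule, not LangGraph's actual implementation: `SketchState` and `apply_update` are hypothetical names, and plain strings stand in for message objects.

```python
import operator
from typing import Annotated, Any, Dict, Sequence, TypedDict, get_type_hints


class SketchState(TypedDict):
    # Annotated metadata carries the reducer used to combine old and new values.
    messages: Annotated[Sequence[str], operator.add]
    next: str


def apply_update(state: Dict[str, Any], update: Dict[str, Any]) -> Dict[str, Any]:
    """Merge a node's partial update into the state, key by key."""
    hints = get_type_hints(SketchState, include_extras=True)
    merged = dict(state)
    for key, value in update.items():
        metadata = getattr(hints[key], "__metadata__", ())
        if metadata and key in merged:
            # A reducer is declared: combine the existing value with the update.
            merged[key] = metadata[0](merged[key], value)
        else:
            # No reducer: the update overwrites the existing value.
            merged[key] = value
    return merged


state: Dict[str, Any] = {"messages": ["user: hi"], "next": ""}
state = apply_update(state, {"messages": ["Coder: done"]})
state = apply_update(state, {"next": "FINISH"})
print(state)
```

Keys with a reducer accumulate, while plain keys such as `next` are simply replaced by the latest value.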

Now connect all the edges in the graph.

for member in members:
    # We want our workers to ALWAYS "report back" to the supervisor when done
    workflow.add_edge(member, "supervisor")

# The supervisor populates the "next" field in the graph state

# which routes to a node or finishes

conditional_map = {k: k for k in members}

conditional_map["FINISH"] = END

workflow.add_conditional_edges("supervisor", lambda x: x["next"], conditional_map)

# Finally, add entrypoint

workflow.set_entry_point("supervisor")

graph = workflow.compile()
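The control flow these edges describe can be sketched in plain Python: the supervisor picks a route, the chosen worker runs and reports back, and the loop ends on `FINISH`. Everything below is a hypothetical simulation for illustration only. `run_supervised`, `scripted_supervisor`, the string messages, and the `END` sentinel value are assumptions of this sketch, and a fixed rule stands in for the LLM.

```python
from typing import Any, Callable, Dict, List

END = "__end__"  # hypothetical sentinel for this sketch

State = Dict[str, Any]
Node = Callable[[State], Dict[str, Any]]


def run_supervised(state: State, supervisor: Node, workers: Dict[str, Node],
                   max_steps: int = 10) -> List[str]:
    """Alternate supervisor -> worker -> supervisor until FINISH is chosen."""
    conditional_map = {name: name for name in workers}
    conditional_map["FINISH"] = END
    trace: List[str] = []
    for _ in range(max_steps):
        state["next"] = supervisor(state)["next"]
        target = conditional_map[state["next"]]
        trace.append(target)
        if target == END:
            return trace
        # The worker's new messages are appended, then control returns to the supervisor.
        state["messages"] = state["messages"] + workers[target](state)["messages"]
    raise RuntimeError("step limit reached without FINISH")


def scripted_supervisor(state: State) -> Dict[str, str]:
    # Hypothetical rule standing in for the LLM: finish once the Coder has replied.
    replied = any(m.startswith("Coder:") for m in state["messages"])
    return {"next": "FINISH" if replied else "Coder"}


workers: Dict[str, Node] = {
    "Researcher": lambda s: {"messages": ["Researcher: notes"]},
    "Coder": lambda s: {"messages": ["Coder: print('Hello, World!')"]},
}

state: State = {"messages": ["user: Code hello world"], "next": ""}
trace = run_supervised(state, scripted_supervisor, workers)
print(trace)
```

The `max_steps` guard plays a role similar to a recursion limit: it stops the loop if the supervisor never chooses `FINISH`.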

Invoke the team

With the graph created, we can now invoke it and see how it performs!

for s in graph.stream(
    {
        "messages": [
            HumanMessage(content="Code hello world and print it to the terminal")
        ]
    }
):
    if "__end__" not in s:
        print(s)
        print("----")

{'supervisor': {'next': 'Coder'}}

----

Python REPL can execute arbitrary code. Use with caution.

{'Coder': {'messages': [HumanMessage(content="The code `print('Hello, World!')` was executed, and the output is:\n\n```\nHello, World!\n```", name='Coder')]}}

----

{'supervisor': {'next': 'FINISH'}}

----

for s in graph.stream(
    {"messages": [HumanMessage(content="Write a brief research report on pikas.")]},
    {"recursion_limit": 100},
):
    if "__end__" not in s:
        print(s)
        print("----")

{'supervisor': {'next': 'Researcher'}}

----

{'Researcher': {'messages': [HumanMessage(content='**Research Report on Pikas**\n\nPikas are small mammals related to rabbits, known for their distinctive chirping sounds. They inhabit some of the most challenging environments, particularly boulder fields at high elevations, such as those found along the treeless slopes of the Southern Rockies, where they can be found at altitudes of up to 14,000 feet. Pikas are well-adapted to cold climates and typically do not fare well in warmer temperatures.\n\nRecent studies have shown that pikas are being impacted by climate change. Research by Peter Billman, a Ph.D. student from the University of Connecticut, indicates that pikas have moved upslope by approximately 1,160 feet. This upslope retreat is a direct response to changing climatic conditions, as pikas seek cooler temperatures at higher elevations.\n\nPikas are also known to be industrious foragers, particularly during the summer months when they gather vegetation to create haypiles for winter sustenance. Their behavior is encapsulated in the saying, "making hay while the sun shines," reflecting their proactive approach to survival in harsh conditions.\n\nThe effects of climate change on pikas are not limited to the Southern Rockies. Studies published in Global Change Biology suggest that climate change is influencing pikas even in areas where they were previously thought to be less vulnerable, such as the Northern Rockies. These findings point to a broader trend of pikas moving to higher elevations, a behavior that may indicate a search for cooler, more suitable habitats.\n\nMoreover, researchers are exploring the possibility that pikas at lower elevations may have developed warm adaptations that could be beneficial for their future survival, given the ongoing climatic shifts. 
This line of research could help conservationists understand how pikas might cope with a warming world.\n\nIn conclusion, pikas are a species that not only fascinate with their unique behaviors and adaptations but also serve as indicators of environmental changes. Their upslope migration in response to climate change highlights the urgency for understanding and mitigating the effects of global warming on mountain ecosystems and the species that inhabit them.\n\n**Sources:**\n- [Colorado Sun](https://coloradosun.com/2023/08/27/colorado-pika-population-climate-change/)\n- [Wildlife.org](https://wildlife.org/climate-change-affects-pikas-even-in-unlikely-areas/)', name='Researcher')]}}

----

{'supervisor': {'next': 'FINISH'}}

----
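Each streamed chunk in the output above is a dictionary keyed by the node that just ran. As a small sketch, the supervisor's decisions could be pulled out of such a stream like this. `routing_trace` is a hypothetical helper, and the sample chunks are abbreviated copies of the shapes printed above rather than real run output.

```python
from typing import Any, Dict, Iterable, List


def routing_trace(chunks: Iterable[Dict[str, Any]]) -> List[str]:
    """Collect the supervisor's 'next' decisions from streamed chunks, in order."""
    decisions: List[str] = []
    for chunk in chunks:
        supervisor_update = chunk.get("supervisor")
        if supervisor_update is not None:
            decisions.append(supervisor_update["next"])
    return decisions


# Abbreviated chunks in the same shape as the streamed output.
sample_chunks = [
    {"supervisor": {"next": "Researcher"}},
    {"Researcher": {"messages": ["(report text)"]}},
    {"supervisor": {"next": "FINISH"}},
]

decisions = routing_trace(sample_chunks)
print(decisions)
```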